Hidden Factors Affecting Your Corrosion Test Results: Expert Analysis

The numbers on corrosion paint a stark picture. Every year, corrosion damage costs an estimated $2.5 trillion, approximately 3.4% of the world’s GDP. The situation gets worse: about 20% of all steel produced worldwide is lost to rust each year. This makes accurate corrosion testing crucial for industries everywhere, yet these tests don’t always yield reliable results.

Engineers use several methods to test corrosion. Salt spray tests, electrochemical testing, immersion testing, and cyclic corrosion testing all help us understand how materials behave. The accuracy of these tests depends on many hidden factors, which is particularly important for stainless steel products made through powder metallurgy and metal injection molding. These tests aim to predict material resistance, estimate component lifecycles, and evaluate performance in specific environments, but the results can mislead us if we overlook key factors. Testing also needs different approaches because corrosion comes in many forms, ranging from surface degradation to environmentally assisted cracking that can cause catastrophic failures, often without any warning signs.

Environmental Variables That Skew Corrosion Test Results

Environmental factors underpin every corrosion test. They also introduce variables that are hard to control and can drastically change test results. Engineers often misjudge a material’s true corrosion resistance because similar materials tested under seemingly identical conditions yield different outcomes.

Humidity Fluctuations in Salt Spray Corrosion Test Chambers

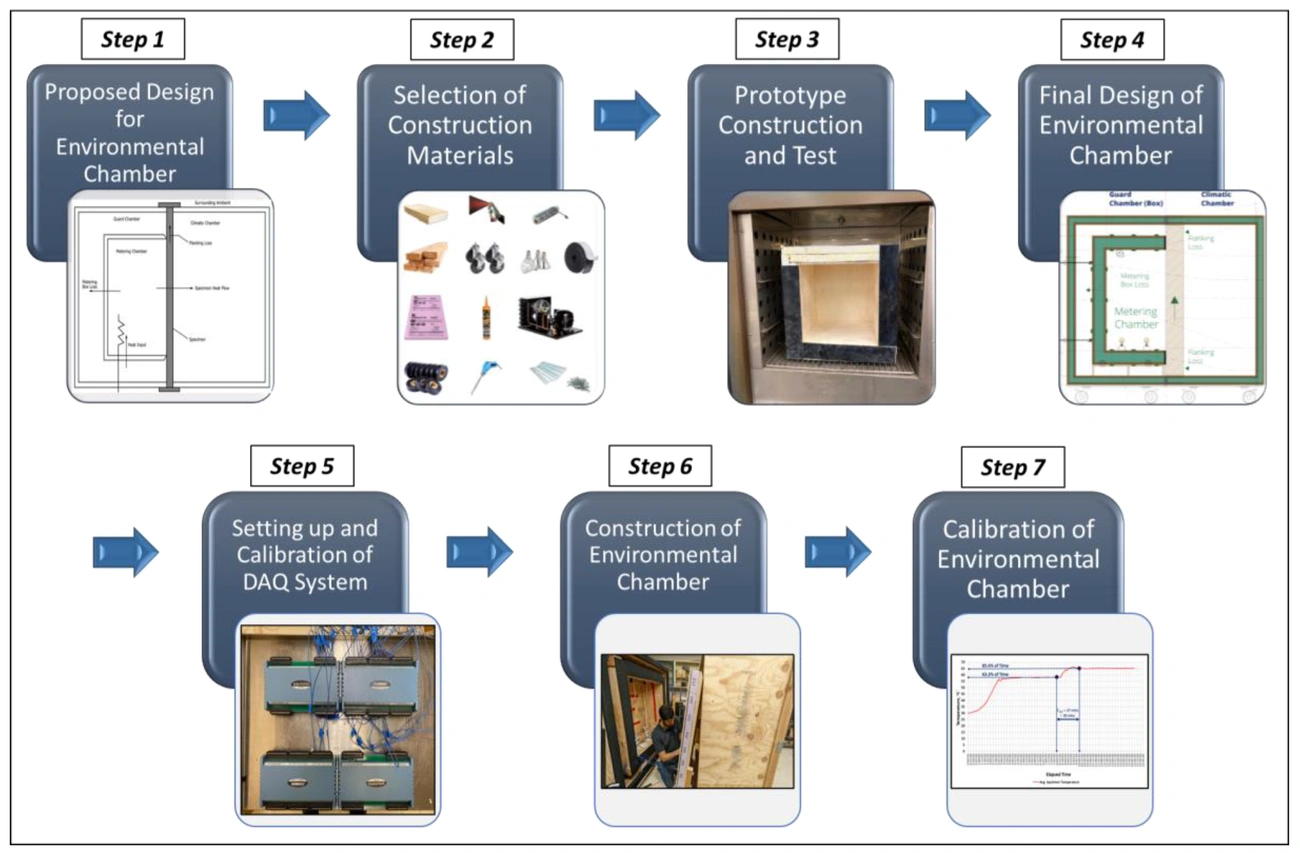

Relative humidity is one of the strongest influences on corrosion rates in test chambers. Modern salt spray chambers control humidity across a 20-100% RH range, and their control systems can achieve 0.1% RH resolution. Even so, the best chambers still show humidity deviations of ±2% RH and fluctuations of ±2% RH during testing cycles. These small variations can lead to major differences in test results.

These fluctuations are a particular problem for powder metallurgy and metal injection molded components, because humidity directly affects how electrolyte films form on metal surfaces. Research shows corrosion rates follow a power law relationship with relative humidity (known as the Peck relation), so small humidity changes can have a disproportionate effect on corrosion rates. A critical relative humidity threshold also exists below which corrosion barely occurs. This makes consistent humidity vital for reliable test results.
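To get a feel for how a power law turns a humidity deviation into a rate deviation, here is a minimal sketch. It assumes the corrosion rate is proportional to RH raised to an exponent; the function name, the 95% RH setpoint, and the exponent of 3 are illustrative assumptions, not values from the studies cited here.

```python
def relative_rate(rh_percent: float, rh_ref_percent: float, exponent: float) -> float:
    """Rate at rh_percent divided by the rate at rh_ref_percent,
    assuming rate is proportional to RH**exponent (power law)."""
    if rh_percent <= 0 or rh_ref_percent <= 0:
        raise ValueError("relative humidity must be positive")
    return (rh_percent / rh_ref_percent) ** exponent


# Hypothetical inputs: 95% RH setpoint, +/-2% RH chamber deviation,
# and an assumed power-law exponent of 3.
SETPOINT_RH = 95.0
ASSUMED_EXPONENT = 3.0

low = relative_rate(SETPOINT_RH - 2.0, SETPOINT_RH, ASSUMED_EXPONENT)
high = relative_rate(SETPOINT_RH + 2.0, SETPOINT_RH, ASSUMED_EXPONENT)
print(f"rate spread: {low:.3f}x to {high:.3f}x of the nominal rate")
```

The spread widens as the exponent grows, so the exponent should be fitted to the material and environment under test rather than borrowed from another system.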

Impact of Ambient Temperature on Electrochemical Measurements

Temperature changes strongly affect electrochemical corrosion measurements in several ways. Controlled studies showed that raising the temperature from room temperature to 80°C caused:

- A shift in open circuit potential (Eocp) in the negative direction

- An increase in corrosion current density from 30.7 to 110.2 μA/cm²

- A drop in polarization resistance from 637.3 to 272.6 Ω·cm²

Test chamber temperature fluctuations (typically ±0.5°C) change reaction kinetics according to the Arrhenius relation. As a rule of thumb, a 10°C rise can double corrosion rates. Temperature uniformity issues (±2°C across chambers) create localized corrosion cells that affect measurement accuracy.
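The doubling rule and the effect of a ±0.5°C fluctuation can both be read off the Arrhenius relation. The sketch below assumes a single thermally activated process; the function names and the 25°C and 35°C example temperatures are illustrative choices, not values from the source.

```python
import math

GAS_CONSTANT = 8.314  # J/(mol*K)


def arrhenius_ratio(t1_celsius: float, t2_celsius: float, ea_j_per_mol: float) -> float:
    """Rate at t2 divided by rate at t1 for activation energy ea_j_per_mol."""
    t1 = t1_celsius + 273.15
    t2 = t2_celsius + 273.15
    return math.exp(ea_j_per_mol / GAS_CONSTANT * (1.0 / t1 - 1.0 / t2))


def activation_energy_for_doubling(t1_celsius: float, t2_celsius: float) -> float:
    """Activation energy (J/mol) at which the rate doubles between t1 and t2."""
    t1 = t1_celsius + 273.15
    t2 = t2_celsius + 273.15
    return GAS_CONSTANT * math.log(2.0) / (1.0 / t1 - 1.0 / t2)


# Activation energy implied by "doubles per 10 degrees" between 25 and 35 C.
ea = activation_energy_for_doubling(25.0, 35.0)
print(f"implied activation energy: {ea / 1000:.1f} kJ/mol")

# Rate change caused by a +0.5 C excursion around a 35 C setpoint.
excursion = arrhenius_ratio(35.0, 35.5, ea)
print(f"rate change for +0.5 C: {100 * (excursion - 1):.1f}%")
```

Under these assumptions a half-degree excursion shifts the rate by a few percent, which is why chamber uniformity matters as much as the setpoint.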

Corrosion rate does not always increase steadily with temperature. Above certain thresholds, powder metallurgy products show reduced corrosion rates at higher temperatures. This happens because higher temperatures lower oxygen solubility in test solutions and increase water evaporation, both of which reduce reactant availability for the corrosion process.

Oxygen Concentration Variability in Immersion Testing

Oxygen concentration is a vital yet often overlooked variable in immersion corrosion testing. Oxygen acts as the electron acceptor in the cathodic reaction, so its availability directly controls corrosion kinetics. Immersion testing faces these challenges:

- Oxygen concentration decreases with immersion depth

- Dissolved oxygen levels fluctuate with temperature changes

- Nitrogen purging (used in some tests) reduces dissolved oxygen from 4 ppm to 0.8 ppm while lowering solution temperature

This variability can produce sharply different results. Corrosion current density drops roughly ninefold (from 201.5 µA·cm⁻² to 22.3 µA·cm⁻²) when dissolved oxygen falls from 4 ppm to 0.8 ppm. Accurate corrosion assessment of powder metallurgy and metal injection molded components requires careful control and reporting of these oxygen concentration variables.
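Current densities like these are easier to compare with service limits once they are converted to a penetration rate using Faraday's law in the form given in ASTM G102. The sketch below applies that conversion to the two current densities quoted above; the equivalent weight and density are assumed values typical of wrought 316-type stainless steel, since the source does not state the alloy.

```python
K1_MM_PER_YEAR = 3.27e-3  # mm*g/(uA*cm*yr), Faraday-law constant from ASTM G102


def corrosion_rate_mm_per_year(i_corr_ua_cm2: float,
                               equivalent_weight: float,
                               density_g_cm3: float) -> float:
    """Uniform penetration rate from corrosion current density."""
    if density_g_cm3 <= 0:
        raise ValueError("density must be positive")
    return K1_MM_PER_YEAR * i_corr_ua_cm2 * equivalent_weight / density_g_cm3


# Assumed material constants (not from the source). Porous PM/MIM parts
# have a lower bulk density, which would change the result.
EW_ASSUMED = 25.5        # dimensionless equivalent weight
DENSITY_ASSUMED = 7.98   # g/cm^3

for label, i_corr in (("4 ppm O2", 201.5), ("0.8 ppm O2", 22.3)):
    rate = corrosion_rate_mm_per_year(i_corr, EW_ASSUMED, DENSITY_ASSUMED)
    print(f"{label}: {rate:.2f} mm/y")
```

The conversion assumes uniform attack over the stated area, so it inherits any error in the surface area used to compute the current density.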

Test chambers that ignore these environmental variables produce misleading data, which can lead to catastrophic material failures in field applications.

Material Surface Conditions Before Testing

Surface preparation plays a key role in corrosion testing accuracy, but people often overlook it. Test results can vary widely between similar materials. The root cause usually lies in the test specimens’ inconsistent surface conditions rather than the testing method itself.

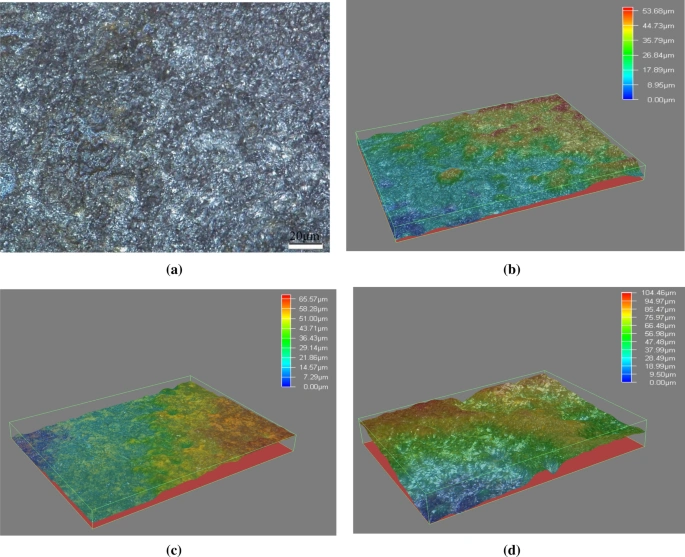

Surface Roughness and Its Effect on Corrosion Rate

Surface roughness plays a major role in how materials corrode: studies show that corrosion rates drop as the surface finish improves. The effect is even more pronounced in powder metallurgy and metal injection molded stainless steel parts because of their inherent porosity.

Metastable pitting sites explain how these two things relate. Smoother surfaces have fewer spots where pits can start, which makes them less likely to corrode. Research on the link between roughness (Ra) and corrosion resistance reveals that higher Ra values lead to exponential increases in mass loss after 24-hour salt spray exposure.

Surface topography affects corrosion in several ways:

- Deep valleys in rough surfaces trap corrosive ions by restricting their diffusion

- Smooth surfaces help create more stable passive films

- Rough areas speed up corrosion through differential aeration

Research on stainless steel samples shows that smoother surfaces raise both the pitting potential and the material’s ability to repassivate. The relationship is approximately linear: lower initial roughness means better resistance to pitting during corrosion tests.

Residual Stress from Machining or Forming

Manufacturing processes leave behind internal stresses in materials that stick around even after removing external loads. These residual stresses throw off corrosion test results, especially when checking for stress corrosion cracking (SCC). They create imbalances that change how materials behave during testing.

Machining adds substantial residual stress. A study of austenitic stainless steel showed that milling created stresses ranging from -548 MPa (compressive) to +542 MPa (tensile). Such large differences inevitably skew test results. Tensile stresses in particular promote stress corrosion cracking, with cracks growing perpendicular to the stress direction.

Heat treatments can significantly change how these stresses are distributed. Researchers tested stainless steel built from laser-fused powder and found that heat treatment at 800°C and 1,200°C reduced residual stress and prevented cracking after 300 hours of immersion. Samples heated to 600°C cracked just like untreated ones.

Contaminants and Oxide Layers on Stainless Steel Surfaces

Surface contamination can make test results unreliable. Iron contamination on stainless steel might not be visible but can cause misleading rust marks during testing. These marks don’t show the material’s true corrosion resistance.

This happens when specimens touch carbon steel tools or surfaces during handling or fabrication. Even tiny iron particles embedded in the stainless steel surface can initiate localized corrosion. Reliable test results require thorough cleaning of specimens to remove all iron contamination.

The passive oxide layer on stainless steel surfaces matters just as much. Stainless steel needs clean surfaces and enough oxygen exposure to maintain this protective layer. Tests using specimens with damaged or incomplete oxide layers will show poor corrosion resistance that doesn’t match how the material really performs.

Passivation – soaking specimens in acid solutions like nitric acid – removes contaminants and helps form protective oxide layers faster. Skipping this step can make materials fail early in testing, especially with powder metallurgy and metal injection molded products where measuring surface area gets tricky.

Test Setup and Equipment Calibration Errors

Properly calibrated equipment is the foundation for reliable corrosion test results. Small deviations in test setup can lead to seriously flawed data, and these problems often stay hidden until products fail in field applications.

Inconsistent Salt Spray Nozzle Pressure in ASTM B117

Salt spray testing accuracy depends on keeping spray parameters steady. ASTM B117 requires the fog collection rate to stay at 1.5 (±0.5) mL/h, measured in collectors with 80 cm² of horizontal collecting area. Many labs struggle with uneven spray distribution caused by fluctuating nozzle pressure.

These problems come from several sources:

- High spray pressure creates particles too small to settle evenly on sample surfaces

- Low pressure creates uneven spray patterns with different concentrations in different areas

- Wrong spray angles hit some specimen areas with strong spray, while others barely get covered

Testing equipment needs regular adjustments and upkeep to fix these issues. You must check for blocked nozzles, look for leaks in spray pipes, and make sure salt spray concentration meets test standards by weighing salt mass carefully. Filter replacement should happen often since blocked filters cause most pressure problems.
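The collection-rate requirement mentioned earlier lends itself to a routine check after each run. The following is a minimal sketch, assuming the collected volume is normalized to 80 cm² of horizontal collecting area as in ASTM B117; the function names and the example readings are hypothetical.

```python
REFERENCE_AREA_CM2 = 80.0  # horizontal collecting area used for normalization
RATE_MIN_ML_PER_H = 1.0    # lower limit, i.e. 1.5 - 0.5 mL/h
RATE_MAX_ML_PER_H = 2.0    # upper limit, i.e. 1.5 + 0.5 mL/h


def collection_rate_ml_per_h(volume_ml: float, hours: float,
                             collector_area_cm2: float = REFERENCE_AREA_CM2) -> float:
    """Fog collection rate normalized to 80 cm^2 of collecting area."""
    if hours <= 0 or collector_area_cm2 <= 0:
        raise ValueError("hours and collector area must be positive")
    return volume_ml / hours * (REFERENCE_AREA_CM2 / collector_area_cm2)


def rate_within_limits(rate_ml_per_h: float) -> bool:
    """True when the rate lies inside the 1.0 to 2.0 mL/h window."""
    return RATE_MIN_ML_PER_H <= rate_ml_per_h <= RATE_MAX_ML_PER_H


# Hypothetical readings from two collectors after a 16-hour run.
for name, volume in (("near nozzle", 40.0), ("chamber corner", 24.0)):
    rate = collection_rate_ml_per_h(volume, hours=16.0)
    status = "OK" if rate_within_limits(rate) else "OUT OF RANGE"
    print(f"{name}: {rate:.2f} mL/h  {status}")
```

Logging this value per collector position, rather than as a chamber average, makes uneven spray distribution visible.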

Electrode Misalignment in Potentiodynamic Polarization

Electrochemical testing methods need precise electrode positioning. Studies show that poor test chamber seals can cause big swings in current readings that make test results useless. This becomes especially important when testing porous stainless steel parts made through powder metallurgy or metal injection molding.

You need a stable environment in the reaction vessel to get consistent readings. Research shows that ceramic disk capacitors between electrolytes and counter electrodes make measurements much more reliable. The 0.1 µF capacitors help smooth polarization curves by removing electrical noise that creates misleading data spikes.

Precise adjustment and proper alignment of the electrochemical test setup provide the accurate measurements needed for reliable corrosion assessment.

Uncalibrated Load Cells in Stress Corrosion Cracking Tests

Stress corrosion cracking (SCC) tests often use proof rings as constant load devices. NACE TM0177-2016 specifies that the applied load must produce a ring deflection that exceeds 0.6% of the ring diameter and is no less than 0.51 mm. An external loading device is needed to deflect the proof ring while measuring the applied load accurately.
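The two deflection conditions can be combined into a single pre-test check. This is a minimal sketch of that arithmetic, assuming deflection and ring diameter are both measured in millimetres; the function names and example ring sizes are hypothetical.

```python
MIN_FRACTION_OF_DIAMETER = 0.006  # 0.6% of ring diameter
MIN_ABSOLUTE_DEFLECTION_MM = 0.51


def required_deflection_mm(ring_diameter_mm: float) -> float:
    """Governing minimum deflection: the larger of the two limits."""
    if ring_diameter_mm <= 0:
        raise ValueError("ring diameter must be positive")
    return max(MIN_FRACTION_OF_DIAMETER * ring_diameter_mm,
               MIN_ABSOLUTE_DEFLECTION_MM)


def deflection_acceptable(deflection_mm: float, ring_diameter_mm: float) -> bool:
    """Deflection must exceed 0.6% of diameter and be at least 0.51 mm."""
    exceeds_fraction = deflection_mm > MIN_FRACTION_OF_DIAMETER * ring_diameter_mm
    meets_absolute = deflection_mm >= MIN_ABSOLUTE_DEFLECTION_MM
    return exceeds_fraction and meets_absolute


# Hypothetical rings: the absolute limit governs small rings,
# the percentage limit governs large ones.
for diameter in (50.0, 100.0, 150.0):
    print(f"D = {diameter:.0f} mm -> minimum deflection "
          f"{required_deflection_mm(diameter):.2f} mm")
```

For rings smaller than 85 mm in diameter the 0.51 mm floor is the stricter condition, since 0.6% of 85 mm equals 0.51 mm.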

Digital micrometers and load measurement systems must keep traceable calibration. Each load cell and amplifier should be calibrated together as one unit to ensure measurement accuracy. Poor load cell calibration directly affects stress corrosion cracking evaluations and can lead to:

- Incorrect measurements that cause product defects and inconsistencies

- Safety risks from incorrect pressure, temperature, or flow readings

- Inefficient operations that increase maintenance costs

Regular calibration schedules must stay on track to ensure equipment produces accurate results within specified limits [20]. Labs testing powder metallurgy and metal injection molded components need these calibration protocols even more because of their unique material properties and porosity considerations.

Misinterpretation of Corrosion Test Data

Accurate data interpretation is the lifeblood of reliable corrosion assessment. Misanalyzed test results often drive incorrect material selection decisions and lead to premature component failures.

Overestimating Corrosion Resistance from Short-Term Tests

Short-duration corrosion tests can give misleadingly optimistic results. Stainless steel components corrode rapidly before forming a passive film, especially components manufactured through powder metallurgy and metal injection molding. This early behavior is difficult to interpret because the steady-state corrosion rate only reveals itself after prolonged testing, typically over 1,000 hours.

Many materials show aggressive initial corrosion followed by reduced rates after passivation. Results from brief testing periods introduce large errors when extrapolated. Tests should span multiple exposure periods to produce meaningful data instead of assuming linear corrosion progression.

Ignoring Localized Pitting in Average Weight Loss Calculations

Weight loss measurements can quantify uniform corrosion but are dangerously inadequate for evaluating localized attack. Components often fail through perforation and leakage from minute pinholes caused by pitting corrosion while showing minimal weight loss. Traditional weight-loss calculations therefore underestimate the real corrosion threat when localized corrosion dominates.
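One way to expose this gap is the pitting factor described in ASTM G46: the deepest measured penetration divided by the average penetration implied by weight loss. The sketch below illustrates the calculation; the coupon mass loss, area, density, and pit depth are hypothetical numbers chosen only to show the scale of the mismatch.

```python
def average_penetration_um(mass_loss_g: float, area_cm2: float,
                           density_g_cm3: float) -> float:
    """Average penetration (micrometres) if the mass loss were uniform."""
    if area_cm2 <= 0 or density_g_cm3 <= 0:
        raise ValueError("area and density must be positive")
    penetration_cm = mass_loss_g / (density_g_cm3 * area_cm2)
    return penetration_cm * 1.0e4  # cm -> micrometres


def pitting_factor(deepest_pit_um: float, mass_loss_g: float,
                   area_cm2: float, density_g_cm3: float) -> float:
    """Deepest penetration divided by average penetration from weight loss.
    A value near 1 indicates uniform attack; large values indicate pitting."""
    average = average_penetration_um(mass_loss_g, area_cm2, density_g_cm3)
    if average <= 0:
        raise ValueError("mass loss must be positive to compute a pitting factor")
    return deepest_pit_um / average


# Hypothetical coupon: 16 mg lost over 20 cm^2, deepest pit 150 micrometres.
avg = average_penetration_um(0.016, 20.0, 8.0)
factor = pitting_factor(150.0, 0.016, 20.0, 8.0)
print(f"average penetration: {avg:.2f} um, pitting factor: {factor:.0f}")
```

A coupon like this would look nearly untouched on the balance while being well on its way to perforating a thin wall.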

Apparent increases in corrosion rate before stable grain dropping sets in are calculation artifacts rather than actual changes in material behavior. They arise from uncompensated increases in the actively corroding surface area.

Misreading EIS Nyquist Plots in Coated Samples

Electrochemical Impedance Spectroscopy (EIS) gives valuable insights, but people often misinterpret it. Nyquist plots have two major disadvantages: high-frequency data are compressed and hard to distinguish, and frequency is not shown explicitly, so impedance values cannot be matched directly to frequencies. This makes results from coated stainless steel specimens challenging to interpret.
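Plotting the same data in Bode form (magnitude and phase against frequency) addresses both limitations. The sketch below generates impedance for a simple Randles-type circuit and prints the Nyquist coordinates alongside the Bode quantities. The circuit and its component values are hypothetical stand-ins; real coated systems usually need more elaborate equivalent circuits.

```python
import cmath
import math


def randles_impedance(freq_hz: float, r_s: float, r_ct: float, c_dl: float) -> complex:
    """Impedance of a solution resistance in series with a parallel R-C pair."""
    omega = 2.0 * math.pi * freq_hz
    return r_s + r_ct / (1.0 + 1j * omega * r_ct * c_dl)


def bode_point(z: complex) -> tuple[float, float]:
    """Return (|Z| in ohm, phase angle in degrees) for one impedance value."""
    return abs(z), math.degrees(cmath.phase(z))


# Hypothetical circuit values: 20 ohm, 100 kohm, 1 microfarad.
R_S, R_CT, C_DL = 20.0, 1.0e5, 1.0e-6

for exponent in range(-2, 6):
    freq = 10.0 ** exponent
    z = randles_impedance(freq, R_S, R_CT, C_DL)
    magnitude, phase = bode_point(z)
    print(f"{freq:>10.2f} Hz  Z'={z.real:>10.1f}  -Z''={-z.imag:>9.1f}  "
          f"|Z|={magnitude:>10.1f}  phase={phase:>6.1f} deg")
```

In the printed table the high-frequency rows cluster near the solution resistance in Nyquist coordinates, while the Bode columns keep each frequency distinct.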

Lissajous plots help verify whether the electrochemical system meets the linearity constraint (non-linear systems show distorted shapes) and the time-invariance constraint (unstable systems show drifting plots). Data scatter also increases considerably with smaller sample areas.

Phase angle interpretation comes with its own pitfalls. The phase angle at 10 Hz (θ10 Hz) fails to evaluate higher delamination area ratios properly, which can lead to wrong conclusions about coating degradation.

Material-Specific Anomalies in Powder Metallurgy and MIM Products

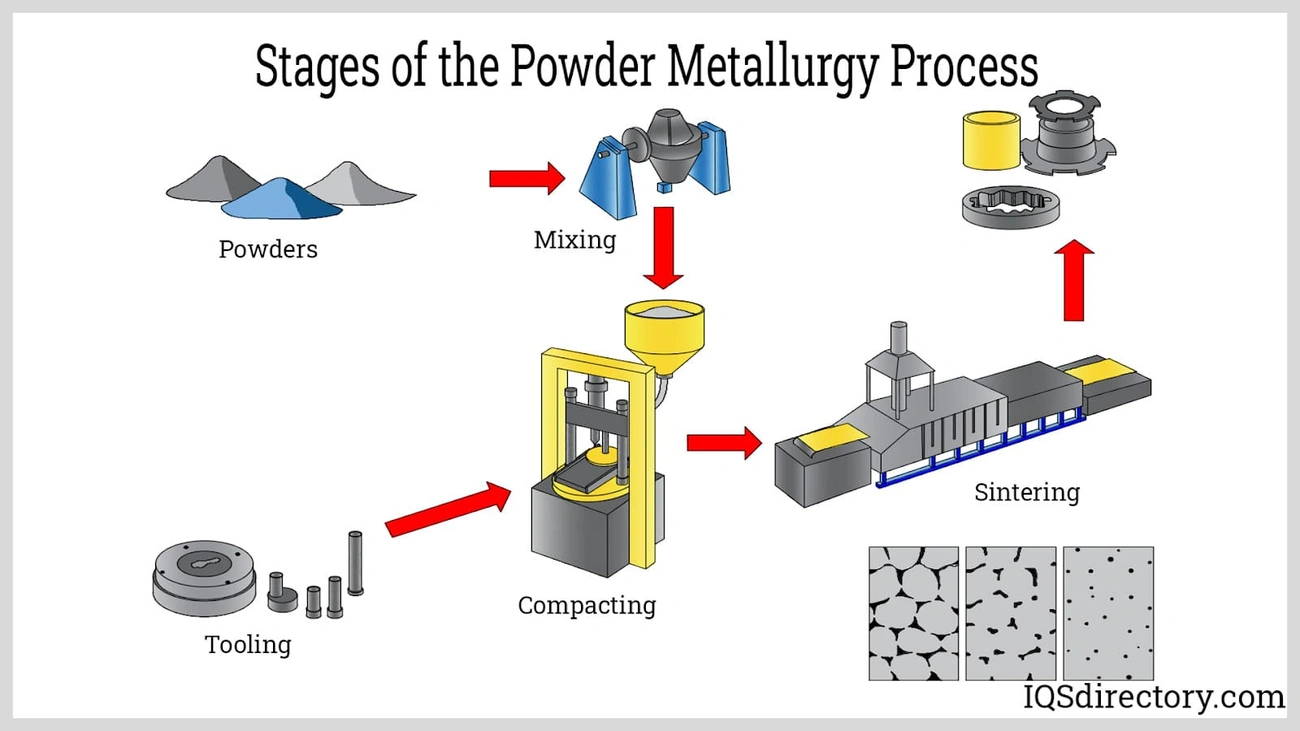

Powder metallurgy and metal injection molding (MIM) products create unique challenges for corrosion tests, and results can mislead if these challenges are not properly accounted for. Standard testing protocols handle manufacturing-specific characteristics poorly, and those characteristics create anomalies.

Porosity-Induced Crevice Corrosion in MIM Stainless Steel

The inherent porosity of MIM stainless steel components creates ideal conditions for accelerated crevice corrosion. ME Steel 316L shows unstable corrosion resistance compared to its wrought counterpart because δ-ferrite in the microstructure and macroscopic porosity act together. Corrosion attack concentrates in the pore areas near powder particle contact zones, where a distinct solution chemistry develops within the confined spaces and drives the corrosion process. A decrease in pH combined with a higher chloride ion concentration shifts the open circuit potential in the negative direction. Metal ion hydrolysis generates acidity first. Chloride ions then migrate inward to maintain electroneutrality, which creates an increasingly aggressive environment.

Sintering Defects Affecting Corrosion Measurement Accuracy

Sintering parameters dramatically affect corrosion test reliability. Higher sintering temperatures result in lower relative porosity and better corrosion resistance. Specimens sintered under an Ar+H2 atmosphere at 1370°C showed minimal susceptibility to intergranular corrosion. Atmosphere selection is critical during sintering: Ar+H2 mixtures produce low porosity with minimal oxygen and nitrogen contamination. In additive manufacturing, laser power strongly affects porosity. Higher power yields dense microstructures with reduced porosity, while higher scan speeds increase porosity because the laser energy input becomes insufficient.

Surface Area Normalization Challenges in Porous Coupons

True surface area measurement of porous specimens presents a fundamental challenge for corrosion tests. Traditional measurement techniques have experimental uncertainties reaching 30-50%. Porosity assessment becomes exceptionally complex for thin nanostructured films, where the absence of bulk quantity rules out classical characterization techniques. Electrochemical methods used for surface area determination add their own uncertainties, because reference values for capacitance vary with surface chemistry, electrolyte type, and applied potential. Reliable corrosion rate calculations require recognizing and properly handling these surface area determination challenges.
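The effect of that uncertainty on a reported current density can be sketched with the common capacitance approach, in which the electrochemically active area is estimated as the measured double-layer capacitance divided by a reference specific capacitance. All numbers below (geometric area, capacitance, current, and the 20 to 60 µF/cm² range of reference values) are illustrative assumptions, not data from the source.

```python
def electrochemical_area_cm2(c_dl_uf: float, c_specific_uf_per_cm2: float) -> float:
    """Active area estimated from double-layer capacitance."""
    if c_specific_uf_per_cm2 <= 0:
        raise ValueError("specific capacitance must be positive")
    return c_dl_uf / c_specific_uf_per_cm2


def current_density_ua_cm2(current_ua: float, area_cm2: float) -> float:
    """Current normalized to the chosen surface area."""
    if area_cm2 <= 0:
        raise ValueError("area must be positive")
    return current_ua / area_cm2


# Hypothetical porous coupon.
GEOMETRIC_AREA_CM2 = 2.0
MEASURED_CDL_UF = 400.0
MEASURED_CURRENT_UA = 60.0

geometric = current_density_ua_cm2(MEASURED_CURRENT_UA, GEOMETRIC_AREA_CM2)
print(f"geometric area {GEOMETRIC_AREA_CM2:.1f} cm2 -> {geometric:.1f} uA/cm2")

# Assumed range of reference specific capacitance values.
for c_specific in (20.0, 40.0, 60.0):
    area = electrochemical_area_cm2(MEASURED_CDL_UF, c_specific)
    density = current_density_ua_cm2(MEASURED_CURRENT_UA, area)
    print(f"Cs = {c_specific:.0f} uF/cm2 -> area {area:.1f} cm2 -> {density:.1f} uA/cm2")
```

The same measured current yields different current densities depending on which area and which reference capacitance are used, so both choices should be reported alongside the result.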

Conclusion

The Path Forward: Ensuring Reliable Corrosion Testing

Reliable corrosion testing requires us to identify the hidden factors that significantly affect test results. This analysis reveals several key variables that determine corrosion test accuracy. Test outcomes often lead to wrong conclusions about material performance because of environmental conditions, surface preparation, equipment calibration, data interpretation methods, and material-specific properties.

Corrosion specialists must know that environmental variables create big differences in test results. Temperature changes, humidity swings, and oxygen levels play a crucial role. These become even more important when you test powder metallurgy and metal injection molded components because porosity adds complexity. The material’s surface conditions need careful thought too. Surface roughness, residual stress, and contaminants change corrosion behavior regardless of the material’s basic properties.

Equipment calibration errors make these challenges worse. Bad test results come from uneven salt spray nozzle pressure, misaligned electrodes, and poorly calibrated load cells. Data interpretation mistakes are just as damaging. Teams often put too much faith in material performance by overestimating corrosion resistance from short tests and missing localized pitting.

Combined corrosion testing approaches work well, especially for powder metallurgy and metal injection molded stainless steel products. These methods merge multiple test protocols to give a complete performance picture. Engineers should use complementary techniques instead of relying on one test method. This helps account for the unique traits of PM and MIM components: their inherent porosity, sintering defects, and complex surface areas.

No testing protocol removes all variables completely, yet dealing with these hidden factors makes results more reliable. Scientists who control these variables make better predictions about material performance, which ultimately helps reduce the $2.5 trillion yearly global cost of corrosion damage. Better corrosion testing needs both new methods and a deeper grasp of these basic but often missed factors that shape test accuracy.

FAQs

Q1. How do environmental factors affect corrosion test results? Environmental factors like humidity, temperature, and oxygen concentration can significantly impact corrosion test outcomes. Even small fluctuations in these variables can lead to substantial differences in corrosion rates, potentially skewing test results.

Q2. Why is surface preparation important for corrosion testing? Surface preparation is crucial because factors like surface roughness, residual stress, and contaminants can dramatically influence corrosion behavior. Inconsistent surface conditions between test specimens can lead to vastly different results, even for identical materials.

Q3. What are common equipment calibration errors in corrosion testing? Common calibration errors include inconsistent salt spray nozzle pressure, electrode misalignment in electrochemical tests, and uncalibrated load cells in stress corrosion cracking tests. These errors can significantly impact the accuracy of test results.

Q4. How can misinterpretation of corrosion test data lead to incorrect conclusions? Misinterpretation often occurs when overestimating corrosion resistance from short-term tests, ignoring localized pitting in weight loss calculations, or misreading EIS Nyquist plots for coated samples. These mistakes can lead to incorrect material selection decisions and premature component failures.

Q5. What unique challenges do powder metallurgy and metal injection molded products present in corrosion testing? These products have inherent porosity that can lead to accelerated crevice corrosion. Sintering defects can affect measurement accuracy, and surface area normalization is challenging due to the porous nature of the specimens. These factors require special consideration during testing and data interpretation.